How to Benchmark AI with the GAIA Benchmark

In an age where artificial intelligence (AI) is not just a buzzword but a tangible force driving innovation, benchmarking the capabilities of AI assistants has become crucial. GAIA, a benchmark for General AI Assistants, emerges as a frontrunner, offering a close look at how capable these digital helpers really are. But why is this important? The answer lies not only in understanding the current state of AI but also in shaping its trajectory. As we harness AI to simplify tasks and make informed decisions, evaluating its performance is key to unlocking its full potential.

GAIA is not just another benchmark; it is a comprehensive framework designed to test AI Assistants in a way that mimics complex, real-world tasks. It stands as a testament to how far AI has come and a predictor of how it will evolve. Through GAIA, we can discern the nuances of AI's problem-solving skills, its adaptability, and its readiness to tackle the intricate challenges posed by human inquiries.

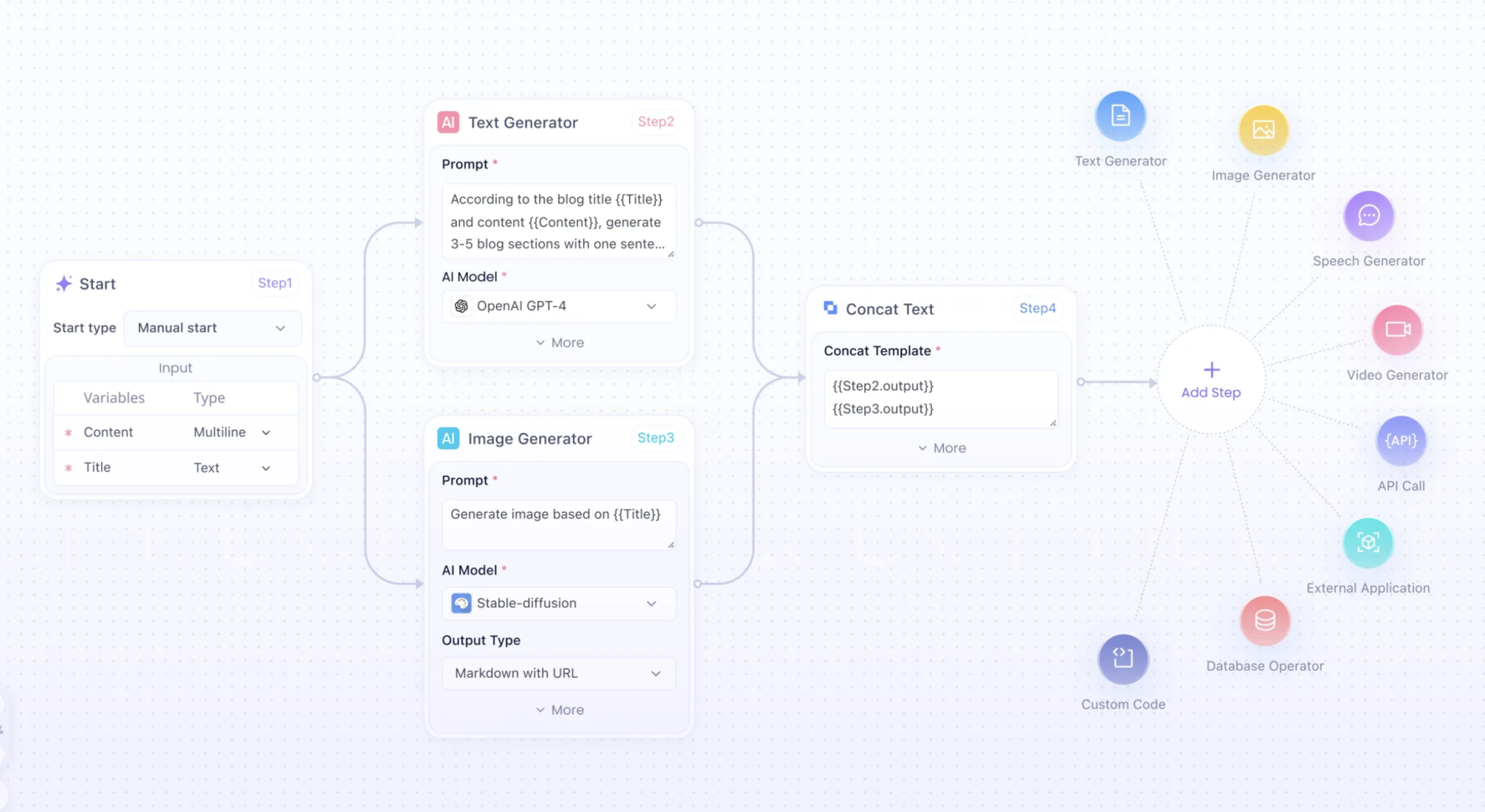

What is GAIA Benchmarking?

At its core, GAIA benchmarking is the process of evaluating the performance of AI Assistants against a series of tasks and scenarios that require a range of cognitive abilities. It's a rigorous assessment that mirrors the multifaceted nature of human questioning and interaction. GAIA benchmarking is designed to push the boundaries of what we expect from AI, examining not just accuracy but the ability to navigate complex, layered queries.

This benchmarking framework is structured around three levels of difficulty, each representing a more sophisticated understanding and manipulation of information. It covers everything from basic fact retrieval to advanced reasoning, multi-modal understanding, and even the use of tools like web browsers. But why do we need such a comprehensive measure? Because the future of AI is not in executing simple commands, but in understanding and acting upon complex, ambiguous, and often unpredictable human language.

How Does GAIA's Benchmarking Work?

Understanding GAIA's benchmarking approach means looking at both its philosophy and its mechanics. Each GAIA question has a single short, factual answer, so scoring comes down to whether the assistant's final answer is correct. Reaching that answer, however, usually takes several steps of reasoning, browsing, or tool use, so a correct answer is strong evidence that the 'how' went right as well. It's akin to setting a student a problem that cannot be solved without showing up with the right working.

- Structured Evaluation: GAIA categorizes questions into levels, with each subsequent level representing an increase in complexity and cognitive demand.

- Diverse Metrics: The headline metric is accuracy on the final answer, reported per level; the published comparison also reports the time taken to answer.

- Real-world Scenarios: The tasks mimic real-world applications, testing an AI's ability to understand and operate in the human world.
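The level-based scoring described above can be pictured with a small sketch. It assumes answers are compared by a normalized exact match, which is a simplification and not GAIA's official scorer; the record fields (`level`, `expected`, `predicted`) and the sample questions are made up for illustration.

```python
from collections import defaultdict


def normalize(answer: str) -> str:
    """Lowercase, trim, and collapse whitespace so formatting noise is not scored as an error."""
    return " ".join(answer.strip().lower().split())


def accuracy_by_level(records):
    """Return {level: fraction of questions answered correctly}.

    `records` is an iterable of dicts with hypothetical keys
    'level', 'expected', and 'predicted'.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for record in records:
        total[record["level"]] += 1
        if normalize(record["predicted"]) == normalize(record["expected"]):
            correct[record["level"]] += 1
    return {level: correct[level] / total[level] for level in sorted(total)}


# Invented records, not taken from the GAIA dataset.
records = [
    {"level": 1, "expected": "Paris", "predicted": " paris "},
    {"level": 1, "expected": "42", "predicted": "41"},
    {"level": 2, "expected": "blue whale", "predicted": "Blue  Whale"},
    {"level": 3, "expected": "17", "predicted": "unknown"},
]

scores = accuracy_by_level(records)
print(scores)
```

Keeping one score per level, rather than a single overall number, is what makes it possible to see where an assistant starts to struggle.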

In the realm of AI Assistants, this approach is revolutionary. It moves away from the siloed, one-dimensional tests of the past and embraces a holistic, multi-dimensional evaluation. Let's delve into the intricate details and numbers that define this benchmarking process.

GAIA Benchmarking AI: LLM vs Human vs Search Engine

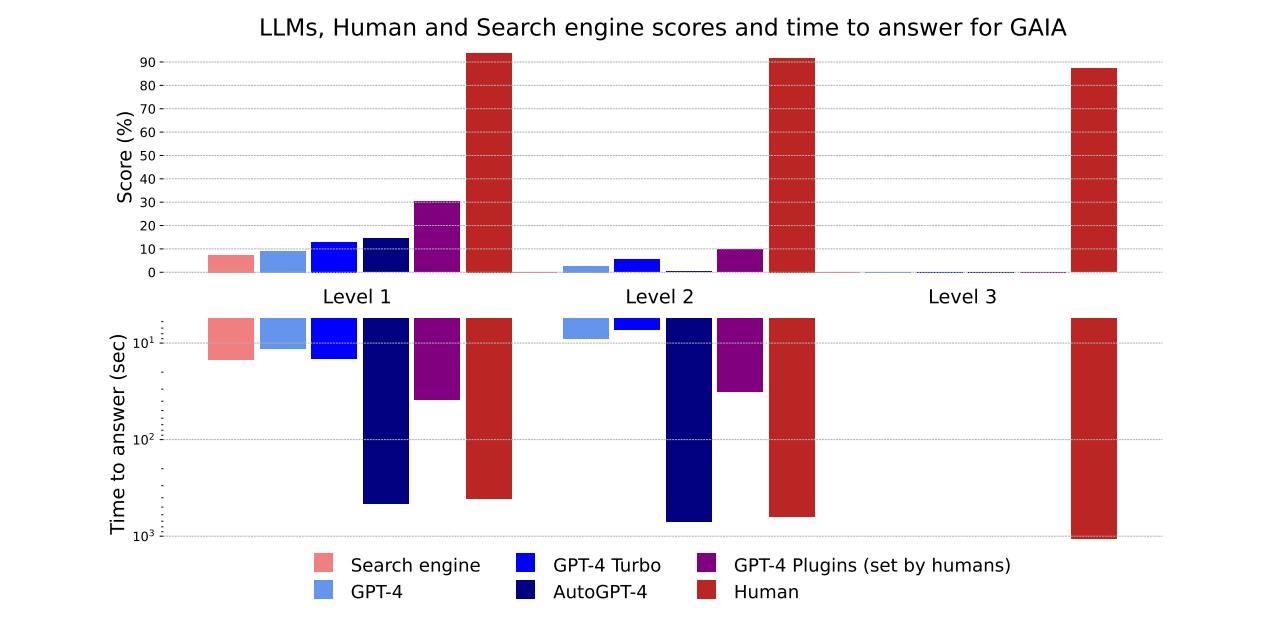

Diving into GAIA's performance across different levels offers a granular view of where AI assistants shine and where they falter. The transition from Level 1 to Level 3 is akin to moving from a well-paved road to a winding mountain pass—it tests agility, robustness, and the ability to handle unexpected turns.

When we analyze AI performance using GAIA benchmark results, the numbers tell a compelling story. The chart above breaks down the results into three levels of complexity, showing how GPT-4, GPT-4 Turbo, AutoGPT with a GPT-4 backend, and GPT-4 with plugins selected by a human stack up against human respondents and a traditional search engine.

Score Comparison:

- Level 1 Results: In the simplest tasks, AI models show promise, with some even outperforming search engines. However, they still trail behind human benchmarks.

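- Level 2 and Level 3 Challenges: As the complexity increases, the AI scores generally trend lower. The gap between human performance and AI widens, underscoring the challenge of nuanced tasks. Overall, the GAIA paper reports that human respondents answered 92% of questions correctly, against 15% for GPT-4 equipped with plugins.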

Response Time Insights:

- Rapid AI Responses: Across all levels, AI tends to provide quicker responses than humans, a testament to its computational speed.

- Human Precision: Despite taking longer, human responses are more accurate, especially at higher levels, indicating a deeper level of processing and understanding.
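A comparison like the one above boils down to two numbers per system and level: accuracy and mean time to answer. The following is a minimal sketch of that bookkeeping. The tuples in `results` are invented placeholders, not figures from the GAIA paper, and the helper names `summarize` and `human_gap` are hypothetical.

```python
from statistics import mean

# Hypothetical rows: (system, level, answered_correctly, seconds_taken).
# All values are invented for illustration only.
results = [
    ("human", 1, True, 400.0),
    ("human", 1, True, 380.0),
    ("human", 3, True, 1000.0),
    ("human", 3, False, 1200.0),
    ("model", 1, True, 20.0),
    ("model", 1, False, 30.0),
    ("model", 3, False, 40.0),
    ("model", 3, False, 60.0),
]


def summarize(rows):
    """Return {(system, level): (accuracy, mean_seconds)}."""
    grouped = {}
    for system, level, correct, seconds in rows:
        grouped.setdefault((system, level), []).append((correct, seconds))
    return {
        key: (
            mean(1.0 if correct else 0.0 for correct, _ in group),
            mean(seconds for _, seconds in group),
        )
        for key, group in grouped.items()
    }


def human_gap(summary, level, baseline="human", system="model"):
    """Accuracy of the baseline minus accuracy of the system at one level."""
    return summary[(baseline, level)][0] - summary[(system, level)][0]


summary = summarize(results)
for level in (1, 3):
    accuracy, seconds = summary[("model", level)]
    print(f"level {level}: model accuracy {accuracy:.2f}, "
          f"mean time {seconds:.0f}s, gap to human {human_gap(summary, level):.2f}")
```

Reporting speed and accuracy side by side keeps a fast but wrong system from looking better than a slow but correct one.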

Strategic Implications:

- Tailoring AI Development: The results serve as a roadmap for developers, highlighting the need for AI that can mimic the depth of human cognition.

- Benchmarking as a Tool: Such comparative analysis is vital for understanding where AI excels, where it falters, and how future iterations can be improved.

What Does the GAIA Benchmark Say About GPT-4?

Exploring the implications of GPT-4's results through GAIA benchmarking paints a picture of where AI might head next. GPT-4's performance is not just a score; it's a beacon showing the way forward, highlighting both achievements and pitfalls.

Understanding Context and Nuance:

- GPT-4 demonstrates significant advancements in understanding context, yet it's the subtleties of human language where it stumbles, as the data reveals.

- The nuances of idiomatic expressions, sarcasm, and cultural references present challenges that are not just technical but also linguistic and sociological.

The Boundaries of Knowledge:

- GAIA scores indicate that while GPT-4 can access a vast repository of information, its ability to discern the most relevant and current data can be improved.

- This suggests a need for better indexing of information and more sophisticated algorithms for data retrieval and application.

Collaboration with Human Intelligence:

- Instances where GPT-4's performance increases with human guidance (through plugins selected by a human for each question) suggest a symbiotic future for AI and human collaboration.

- It underscores the potential for AI to augment human capabilities rather than replace them, with each complementing the other's strengths.

The Role of Human-Assisted AI (GPT-4 Plugins)

Delving into the role of human-assisted AI, particularly GPT-4 plugins, we unravel the synergistic potential between human ingenuity and artificial intelligence.

Enhancing AI Capabilities:

- Plugins programmed by humans can expand the capabilities of AI, enabling it to perform tasks that go beyond its default programming.

- This collaboration can lead to more creative, flexible, and context-aware AI responses, as evidenced by improved performance in GAIA benchmarks.
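To make the idea of human-selected plugins concrete, here is a toy sketch in which a person decides which tools are enabled for a task and the assistant may call only those. It is not how GPT-4 plugins are implemented; the registry, the two tools, and `run_with_plugins` are all hypothetical names invented for this example.

```python
from typing import Callable, Dict, List

Tool = Callable[[str], str]


def calculator(expression: str) -> str:
    """Toy tool: sums integers separated by '+', for example '2+3'."""
    return str(sum(int(part) for part in expression.split("+")))


def word_count(text: str) -> str:
    """Toy tool: counts whitespace-separated words."""
    return str(len(text.split()))


# Hypothetical registry of every tool that exists.
REGISTRY: Dict[str, Tool] = {"calculator": calculator, "word_count": word_count}


def run_with_plugins(task_input: str, tool_name: str, enabled: List[str]) -> str:
    """Run a tool only if the human operator enabled it for this task."""
    if tool_name not in enabled:
        raise PermissionError(f"tool '{tool_name}' was not enabled for this task")
    return REGISTRY[tool_name](task_input)


# The human enables only the calculator for an arithmetic question.
answer = run_with_plugins("12+30", "calculator", enabled=["calculator"])
print(answer)
```

The human's contribution here is the choice of `enabled`: narrowing the toolset to what the task needs is a form of guidance the model does not have to work out for itself.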

Customization and Personalization:

- Human-assisted AI can be tailored to specific domains or tasks, allowing for a more personalized approach to problem-solving.

- The data shows that when GPT-4 operates with plugins, its adaptability to user-specific needs and contexts is significantly enhanced.

Future Directions for AI Development:

- The success of human-assisted AI models points to a future where AI development is increasingly user-centric, with a focus on customizable and adaptable systems.

- By examining the successes and limitations of these plugins, developers can better understand how to build AI that complements human intelligence more effectively.

Benchmarking Human vs. AI Using GAIA - Really?

GAIA's benchmarking results lay bare the stark contrasts between human and machine. It's not just a contest of accuracy but a measure of approach, creativity, and adaptability. The human brain, with its millennia of evolution, is pitted against the decade-spanning evolution of AI—a battle of nature's design against human ingenuity.

Speed and Precision:

- AI assistants can outpace humans in straightforward tasks, where speed is matched with precision.

- Yet, in more complex scenarios, the human ability to quickly grasp nuances and read between the lines becomes evident.

The Creativity Gap:

- Humans bring creativity to problem-solving, often finding unique shortcuts and solutions that AI, bound by its programming, cannot.

- The results show that while AI can learn and adapt, there remains an 'intuition gap' that human experience fills.

Understanding Context:

- Humans excel at understanding context and subtleties in language, something AI is still learning and has not yet mastered.

- This is vividly reflected in the results where context-heavy tasks see a dip in AI performance.

The insights from GAIA's data tell us why humans still excel in areas where AI is lagging. It's a dance of cognitive abilities, emotional intelligence, and the intrinsic human trait of adaptability that sets us apart.

Cognitive Flexibility:

- Humans can pivot their approach based on the context, a skill AI is still developing.

- The benchmarks highlight this flexibility, especially in tasks requiring understanding of nuanced phrasing or ambiguous information.

Emotional Intelligence:

- Reading emotions and reacting accordingly is a distinctly human trait. While AI can mimic this to some extent, it lacks the genuine empathetic response humans naturally possess.

- The data often shows higher human performance in tasks requiring emotional discernment.

Adaptability:

- The human ability to adapt to new information and situations is evident in the benchmarks. When faced with a novel problem, humans can draw from a diverse array of experiences to find a solution.

- AI, on the other hand, is confined to its training, which can become a limitation in uncharted scenarios.

What the GAIA Benchmarks Tell Us About AI's Future

The GAIA benchmarks serve as a crystal ball, providing insights into the trajectory of AI development. These benchmarks are not merely scores; they encapsulate the progress AI has made and hint at the milestones yet to be achieved.

AI’s Progression:

- The benchmarks map AI’s journey from simple question-answering to complex problem-solving, showcasing how models like GPT-4 are edging closer to human-like understanding.

- The drop in scores from Level 1 to Level 3 shows where today's models run out of steam, and gives a yardstick for measuring future progress on increasingly complex tasks.

Bridging the Gap:

- While AI assistants excel in data processing and pattern recognition, GAIA benchmarks highlight the gap in areas requiring emotional intelligence and cultural awareness.

- The future of AI lies in bridging this gap, possibly through the integration of more nuanced language models and emotional datasets.

AI’s Role in Society:

- The benchmarks also reflect on the societal role of AI, suggesting a future where AI could work alongside humans in more collaborative and assistive capacities.

- The data points to a need for ethical considerations and guidelines to ensure AI’s growth benefits society as a whole.

Lessons Learned and the Path Forward

Reflecting on the lessons learned from GAIA benchmarks and the path forward, we conclude that the journey of AI is one of continuous learning and adaptation.

Adaptation to Change:

- AI must evolve to keep pace with the dynamic nature of human language and behavior, as the benchmarks clearly indicate.

- The lessons learned from GAIA’s evaluations point towards an AI that can adapt to changes and learn from new experiences, much like humans.

Continuous Learning:

- The benchmarks suggest that future AI models will need mechanisms for continuous learning, allowing them to update and refine their knowledge base and algorithms.

- This could involve real-time learning from interactions, feedback, and environmental changes, ensuring that AI remains relevant and effective.

Collaboration with Humans:

- A key takeaway from the benchmarks is the potential for AI to enhance human capabilities, not replace them.

- The path forward is likely to involve more synergistic relationships between AI and humans, where each complements the other’s strengths.

Conclusion: GAIA's Benchmarking Legacy

In conclusion, GAIA's benchmarking is set to be a cornerstone in the history of AI development. It has established a new standard for assessing AI Assistants, one that goes beyond simple tasks to embrace the full spectrum of human cognitive abilities.

You can read more in the paper, "GAIA: a benchmark for General AI Assistants" (Mialon et al., 2023).

from Anakin Blog anakin.ai/blog/gaia-benchmark/
