Neural-chat-7b-v3-1: Can Intel's Model Beat GPT-4?

In the rapidly evolving landscape of artificial intelligence (AI), Intel has made significant strides with its latest creation: the neural-chat-7b-v3-1 model. This AI model is not just another incremental improvement; it represents a substantial leap in the way machines understand and interact in human language. The neural-chat-7b-v3-1 is Intel's answer to the growing demand for sophisticated, conversational AI that can seamlessly integrate into various aspects of our digital lives, from customer service to personal assistants.

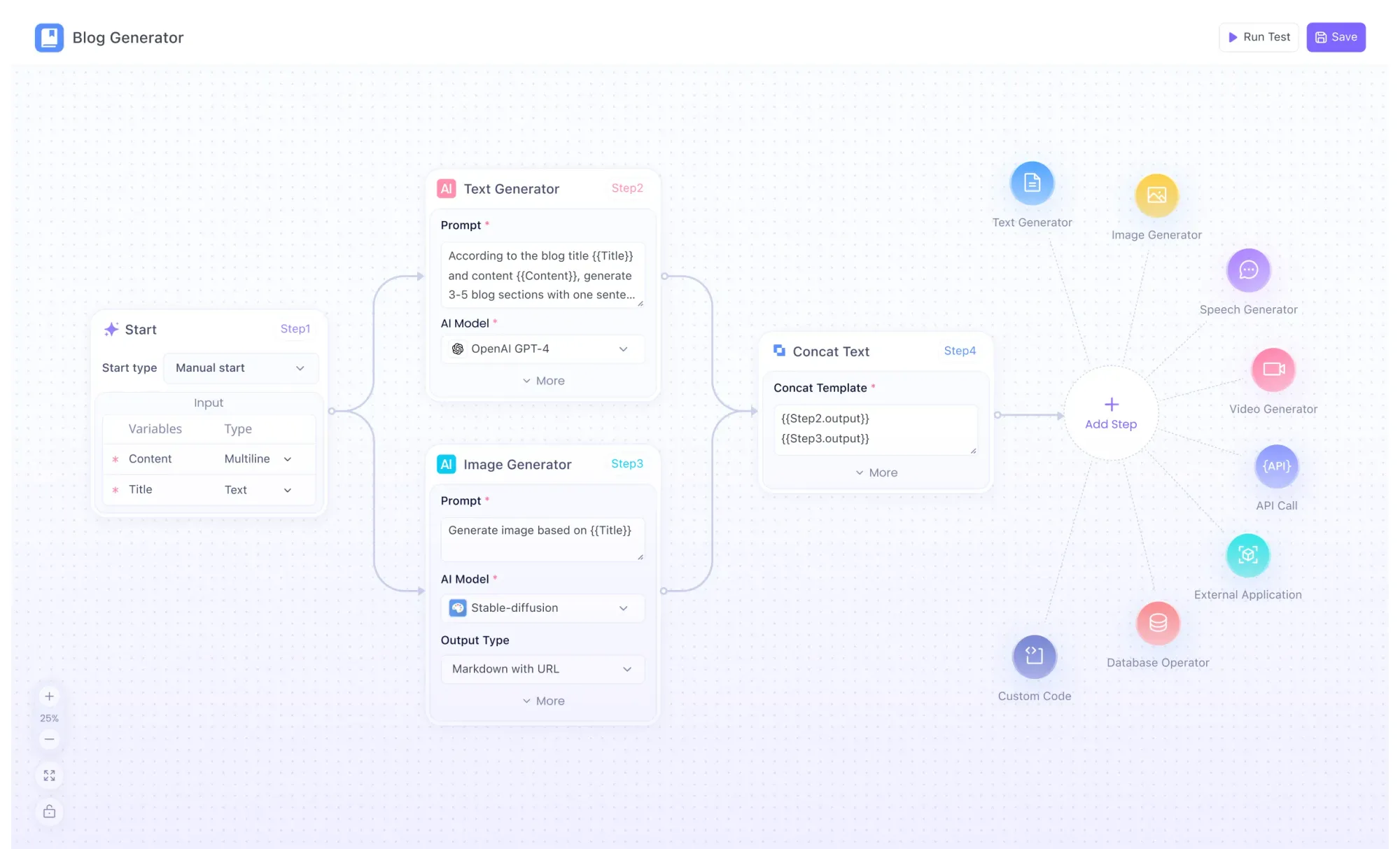

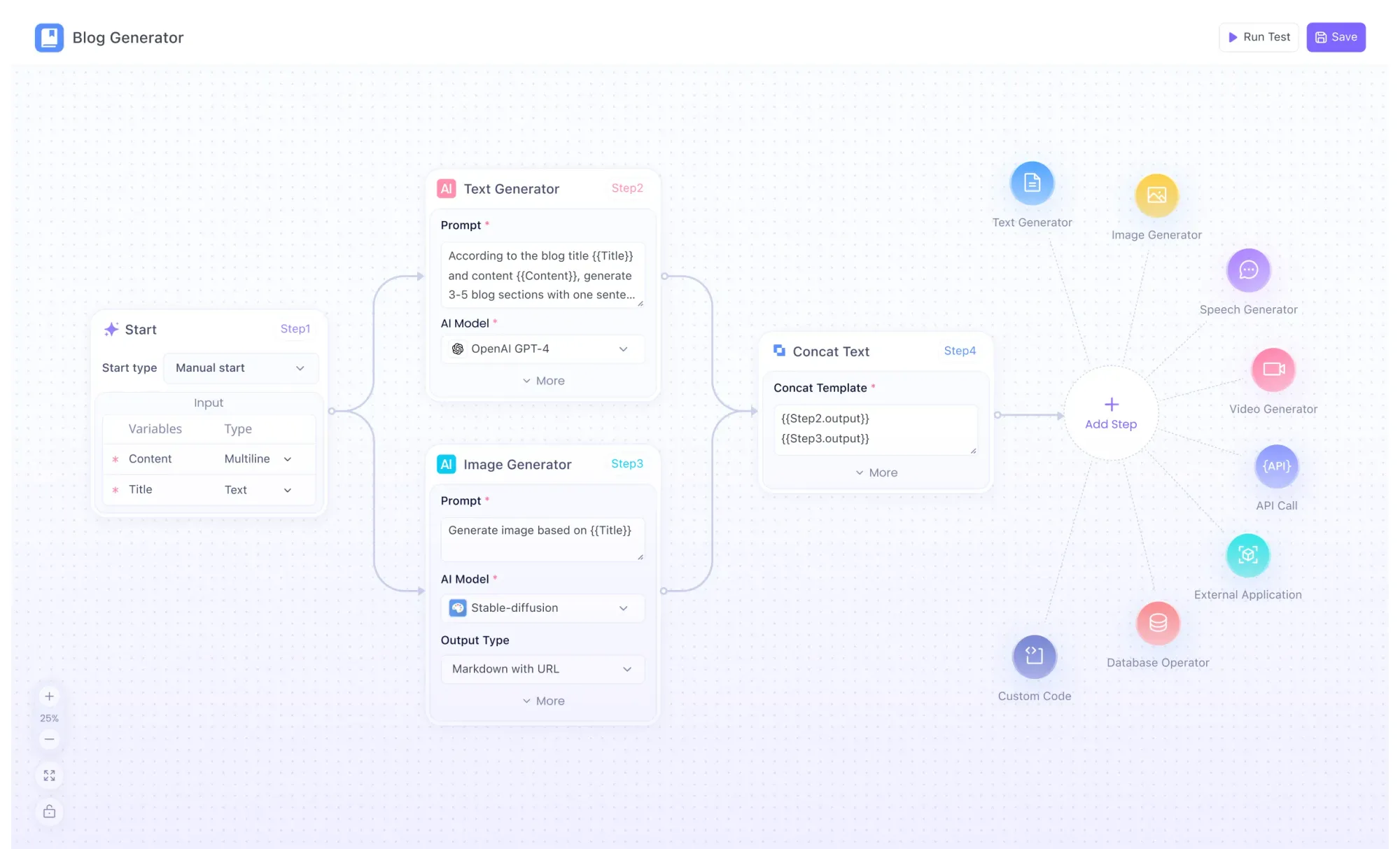

Anakin AI can help you easily create any AI app with a highly customized workflow, with access to many AI models such as GPT-4-Turbo, Claude-2-100k, APIs for Midjourney and Stable Diffusion, and much more!

Interested? Check out Anakin AI and test it out for free!👇👇👇

What is Intel's Neural Chat Model?

Intel's Neural Chat Model, known as neural-chat-7b-v3-1, stands at the forefront of AI-driven conversational models. It's designed to mimic human-like interactions, offering responses that are not only accurate but contextually relevant and nuanced. This model is part of a new wave of AI, where the focus is on understanding and generating human language in a way that feels natural and effortless.

Advantages of Neural Chat Model

The neural-chat-7b-v3-1 is built on a foundation of sophisticated machine learning algorithms and neural networks. It leverages a combination of vast datasets and advanced training techniques to achieve a level of conversational ability that was previously unattainable. Key aspects include:

- Language Understanding: The model's ability to parse and understand human language, including nuances, idioms, and varied sentence structures.

- Contextual Awareness: Unlike simpler chatbots, this model can maintain the context of a conversation, leading to more coherent and relevant responses.

- Adaptive Learning: The model can be fine-tuned further on new data to improve its accuracy and relevance for a particular use case. The published weights do not change on their own during a conversation.

How is Neural-Chat-7b-v3-1 Trained?

The training of neural-chat-7b-v3-1 is a complex, multi-layered process, involving high-quality, large-scale datasets. The model is not just 'programmed' in the traditional sense; it's 'taught' through exposure to vast amounts of text and iterative refinement.

At its core, the training process involves the following steps:

- Base Model Selection: The journey begins with a base model, which in this case is the Mistral-7B-v0.1. This model provides the foundational architecture and pre-trained capabilities that form the starting point for further refinement.

- Fine-Tuning with DPO Algorithm: The model then undergoes fine-tuning using the Direct Preference Optimization (DPO) algorithm. This step tailors the model's responses to be more aligned with human preferences, enhancing its conversational abilities.

- Dataset Utilization: The Open-Orca/SlimOrca dataset plays a crucial role in training. This open-source dataset provides a rich variety of conversational scenarios and language structures, enabling the model to learn a wide range of dialogue patterns.

- Learning Rate and Batch Sizes: With a learning rate of 1e-04 and specific train and eval batch sizes, the model strikes a balance between learning efficiency and computational demands.

- Optimizer and Scheduler: The Adam optimizer, known for its effectiveness in handling large datasets, is coupled with a cosine learning rate scheduler, which adjusts the learning rate dynamically during training.

- Training Period: Training took place between September and October 2023, a period used for iterative improvements and refinements of the model.
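
The learning rate and scheduler settings above can be illustrated with a small sketch. It assumes the standard cosine decay formula with an optional linear warmup; the `cosine_lr` helper, the warmup parameter, and the step counts are hypothetical and only show the shape of the schedule, not Intel's actual training code.

```python
import math


def cosine_lr(step, total_steps, base_lr=1e-4, warmup_steps=0):
    """Learning rate at `step` under optional linear warmup plus cosine decay.

    base_lr matches the 1e-04 quoted above; warmup_steps and total_steps
    are illustrative assumptions.
    """
    if warmup_steps > 0 and step < warmup_steps:
        # Linear ramp from 0 up to base_lr during warmup
        return base_lr * step / warmup_steps
    decay_steps = max(1, total_steps - warmup_steps)
    progress = min(1.0, (step - warmup_steps) / decay_steps)
    # Cosine curve from base_lr (progress = 0) down to 0 (progress = 1)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    total = 1000  # hypothetical number of optimizer steps
    for step in (0, 250, 500, 750, 1000):
        print(f"step {step:4d}: lr = {cosine_lr(step, total):.2e}")
```

The schedule starts at the full learning rate, reaches half of it at the midpoint, and decays smoothly toward zero at the end of training.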

Neural-chat-7b-v3-1 is not just another chatbot; it's a sophisticated AI conversationalist, built from a capable base model and refined through preference-based fine-tuning.
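
To make the DPO step more concrete, here is a minimal sketch of the DPO loss for a single preference pair, written in plain Python. It follows the published DPO objective; the function name `dpo_loss`, the example log-probabilities, and `beta=0.1` are assumptions for illustration and are not taken from Intel's training setup.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (chosen, rejected) response pair.

    Each argument is the summed log-probability of a response under the
    policy being trained or under the frozen reference model.
    beta=0.1 is an assumed value, not a documented setting.
    """
    # How far the policy has moved from the reference on each response
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(margin)), computed in a numerically stable way
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    # Policy identical to the reference: no preference has been learned yet
    print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
    # Policy now favors the chosen response more than the reference does
    print(dpo_loss(-8.0, -14.0, -10.0, -12.0))
```

The loss falls as the policy raises the probability of the preferred response relative to the rejected one, which is how this step aligns the model with human preferences.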

Benchmarks of Neural-Chat-7b-v3-1

Evaluating the performance of an AI model like neural-chat-7b-v3-1 is crucial to understand its efficacy and areas of application. Benchmarks are essential tools that provide insights into the model's capabilities, helping us gauge its conversational accuracy and responsiveness.

Performance Metrics and Improvements

Intel's neural-chat-7b-v3-1 has shown significant improvements over its predecessors in various benchmark tests. The model was evaluated on the open_llm_leaderboard, a platform that assesses AI models across multiple tasks. Here's a snapshot of its performance:

- Overall Score: The model achieved a higher average score across the leaderboard's tasks than its predecessors, reflecting its enhanced ability to handle diverse conversational scenarios.

- Task-Specific Improvements: In tasks such as ARC, HellaSwag, and MMLU, the model demonstrated notable improvements, indicating its refined understanding and response generation abilities.

What These Benchmarks Mean

These benchmark results are not just numbers; they represent the model's growth in understanding human language and context. Improved scores in these benchmarks correlate directly to a more human-like, nuanced, and effective conversational AI.

How to Install Neural Chat 7B Model on Windows/Mac

Installing Intel's Neural Chat 7B Model, specifically the neural-chat-7b-v3-1 version, is a straightforward process whether you are using a Windows or Mac system. This section provides a detailed guide on setting up this advanced AI model on your computer.

Prerequisites

Before diving into the installation process, ensure your system meets the following requirements:

- A stable internet connection.

- Adequate storage space for downloading the model and related files.

- A compatible Python environment.

Also make sure that:

- All dependencies are correctly installed.

- Your Python version is compatible with them.

- Your virtual environment, if you use one, is activated before running the model.
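
The checks above can be automated with a short script. This is a minimal sketch using only the standard library. The minimum Python version `(3, 8)` is an assumption, so check the requirements of the package versions you install, and the helper names are hypothetical.

```python
import importlib.util
import sys

REQUIRED_PACKAGES = ("torch", "transformers", "accelerate")
MIN_PYTHON = (3, 8)  # assumed minimum; verify against your package versions


def python_is_compatible(minimum=MIN_PYTHON):
    """True if the running interpreter is at least `minimum` (major, minor)."""
    return sys.version_info[:2] >= minimum


def in_virtual_environment():
    """True if Python is running inside a venv-style virtual environment."""
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


def missing_packages(packages=REQUIRED_PACKAGES):
    """Names from `packages` that cannot be found for import."""
    return [name for name in packages if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Python version OK: ", python_is_compatible())
    print("Virtual env active:", in_virtual_environment())
    print("Missing packages:  ", missing_packages() or "none")
```

Running it inside the activated environment before loading the model shows which prerequisite, if any, still needs attention.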

Step-by-Step Installation Guide

Follow these steps to install the Neural Chat 7B Model on your Windows or Mac computer:

Python Environment Setup: If you haven’t already, install Python on your system. A virtual environment is recommended to avoid conflicts with other Python projects.

```shell
python3 -m venv neural-chat

# For Mac
source neural-chat/bin/activate

# For Windows
.\neural-chat\Scripts\activate
```

Install Necessary Packages: Next, install the required libraries such as torch, transformers, and accelerate. These libraries are crucial for running the model.

```shell
pip install torch transformers accelerate
```

Downloading the Model: The model can be downloaded from its Hugging Face repository page. If you use a quantized build, choose the quantization level based on your system's capabilities. Higher-precision quantization (more bits per weight) generally gives better responses, but it also requires more memory.

Load the Model: After downloading the model, load it into your Python environment. If you're using a tool like LM Studio, follow its specific instructions for loading models.

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = 'Intel/neural-chat-7b-v3-1'

# Downloads the files on first use, then loads them from the local cache
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)
```

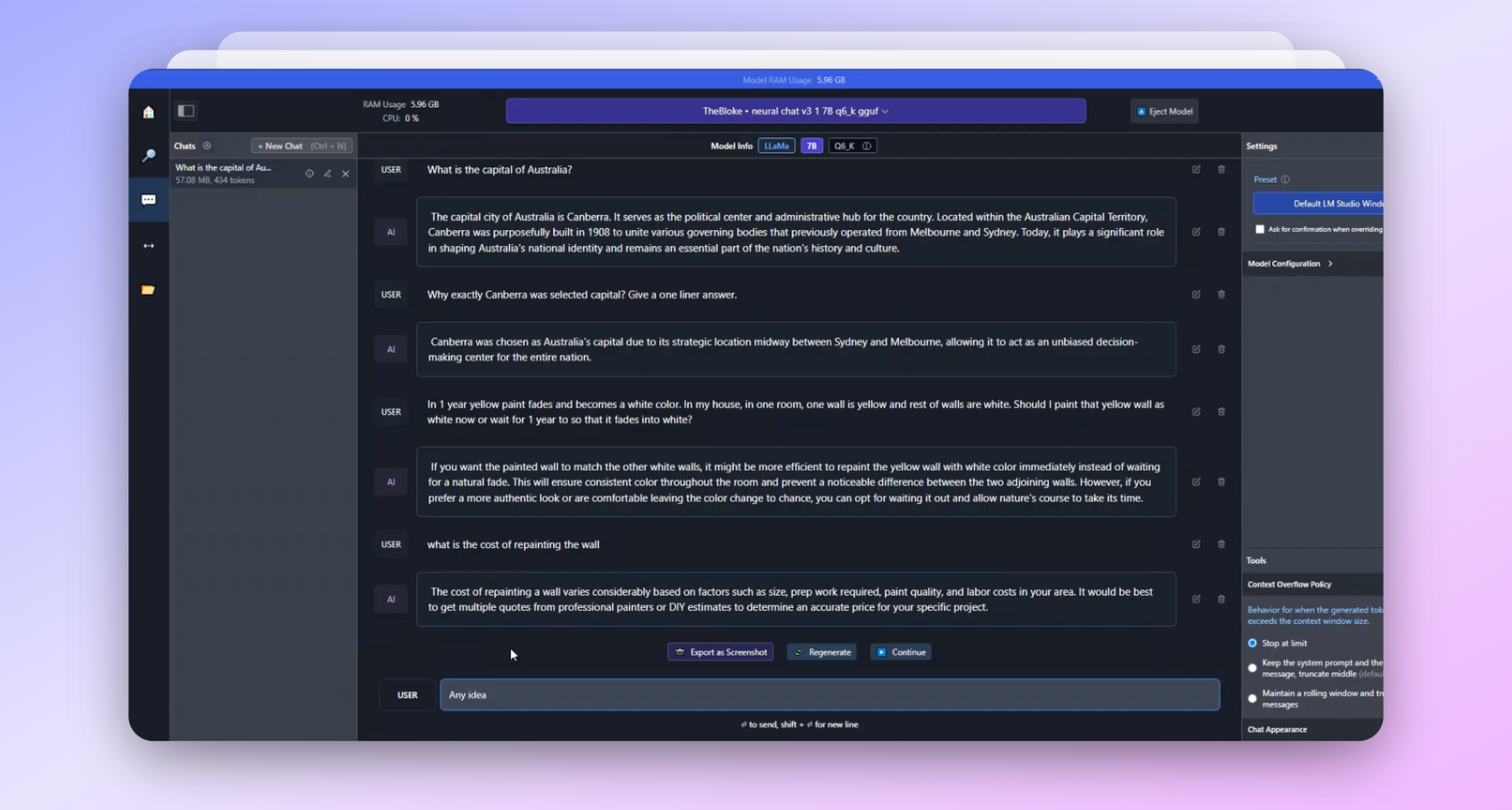

Testing the Model: Once the model is loaded, you can start interacting with it. Test it by asking simple questions or prompts and observe its responses.

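```python
# generate_response is a small helper defined here, not a transformers function
def generate_response(model, tokenizer, prompt, max_new_tokens=128):
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    return tokenizer.decode(model.generate(**inputs, max_new_tokens=max_new_tokens)[0], skip_special_tokens=True)

# Example prompt
prompt = "What is the capital of Australia?"
response = generate_response(model, tokenizer, prompt)
print(response)
```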
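
Chat-tuned models usually respond best when the prompt follows the template they were trained on. The sketch below assumes a `### System:` / `### User:` / `### Assistant:` layout, which should be verified against the model card before relying on it. The `build_prompt` helper and the default system message are hypothetical.

```python
def build_prompt(user_message, system_message="You are a helpful assistant."):
    """Wrap a user message in an assumed system/user/assistant template.

    The section markers are an assumption; confirm them on the model card.
    """
    return (
        f"### System:\n{system_message}\n"
        f"### User:\n{user_message}\n"
        "### Assistant:\n"
    )


if __name__ == "__main__":
    print(build_prompt("What is the capital of Australia?"))
```

The resulting string can be passed to the model as the prompt in place of the raw question.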

How Much RAM Do I Need to Run Neural-chat-7b-v3-1?

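While running the model, keep an eye on your system's resource consumption, especially memory usage. As a rough guide, a 7-billion-parameter model stored in 16-bit precision needs about 2 bytes per parameter, or roughly 14 GB for the weights alone, before counting activations and other overhead. Models like neural-chat-7b-v3-1 can be resource-intensive, so ensure your system has enough RAM and GPU capabilities to handle it efficiently.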
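
A back-of-the-envelope estimate of weight memory at different precisions can be sketched as follows. It assumes a round 7 billion parameters, as implied by the "7B" in the model name, and counts only the weights. Real usage is higher once activations, the KV cache, and framework overhead are included. The helper name is hypothetical.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


NUM_PARAMS = 7_000_000_000  # round figure implied by "7B"; an assumption

if __name__ == "__main__":
    precisions = (("float32", 32), ("float16/bfloat16", 16), ("8-bit", 8), ("4-bit", 4))
    for label, bits in precisions:
        print(f"{label:>17}: ~{weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
```

Halving the number of bits per weight halves the memory needed for the weights, which is why quantized builds are the usual choice on machines with limited RAM.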

Once you're comfortable with the basic setup, you can explore more advanced features of the model. Customize prompts, experiment with different settings, and integrate the model into larger projects to leverage its full potential.

Conclusion

Intel's Neural Chat 7B Model, particularly the neural-chat-7b-v3-1 version, represents a significant advancement in the field of AI and conversational models. Its ability to understand and generate human-like responses opens up vast opportunities across various sectors. From enhancing customer service experiences to assisting in education and personal wellness, the potential applications of this model are vast and varied.

As AI continues to evolve, models like the Neural Chat 7B will undoubtedly play a crucial role in shaping our interaction with technology, making digital experiences more seamless, personalized, and engaging. The future of AI conversations looks promising, and the Neural Chat 7B Model is at the forefront of this exciting journey.

FAQs about Intel's Neural Chat 7B Model

What is Intel's Neural Chat 7B Model?

- Intel's Neural Chat 7B Model, particularly the neural-chat-7b-v3-1 version, is an advanced AI conversational model designed to understand and interact in human language. It uses sophisticated machine learning algorithms to provide nuanced and context-aware responses, making it a significant advancement in AI conversational technology.

How do I install the Neural Chat 7B Model on my computer?

- To install the Neural Chat 7B Model on Windows or Mac, you need to set up a Python environment, install necessary packages like torch and transformers, download the model from its Hugging Face repository, and then load it into your Python environment for use. Detailed steps can be found in the main article.

How resource-intensive is the Neural Chat 7B Model?

- The Neural Chat 7B Model can be resource-intensive, particularly in terms of memory usage. It requires a system with adequate RAM and, ideally, GPU capabilities for optimal performance. Users should consider their system specifications before installation and use.

from Anakin Blog anakin.ai/blog/intels-neura...
