Claude 2 vs GPT-4 vs Bard: Who's the Best AI for Writing?

Three chatbots have emerged as frontrunners in the fast-moving world of AI: Claude 2, GPT-4, and Bard. Each is a powerhouse in its own right, and together they are changing how we interact with machines, whether for solving complex equations or having a casual chat. The question on everyone's mind is: which is the best AI for writing? Let's compare their strengths and weaknesses:

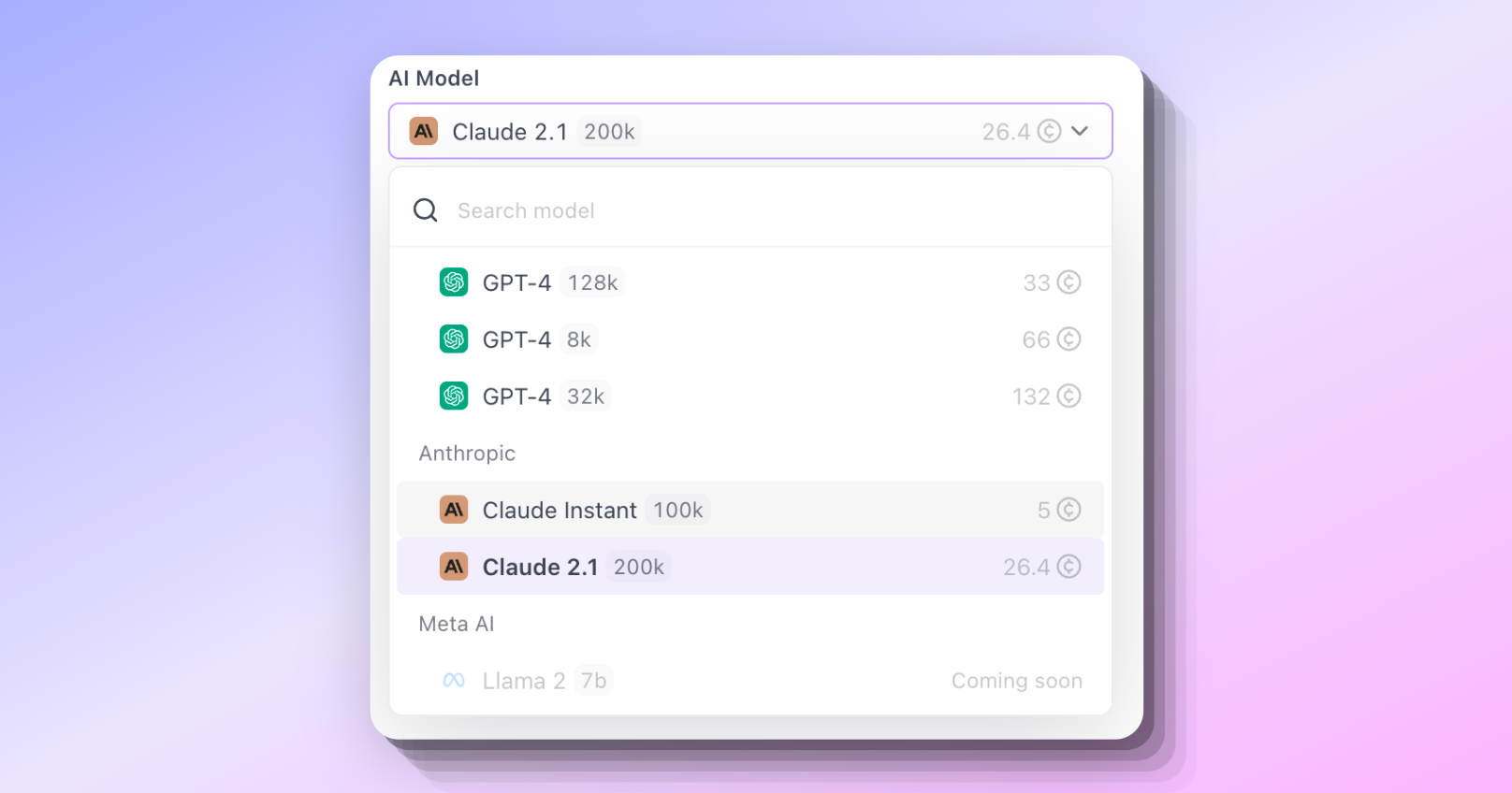

But before we get started, you can try Claude and ChatGPT for content writing for yourself at Anakin AI:

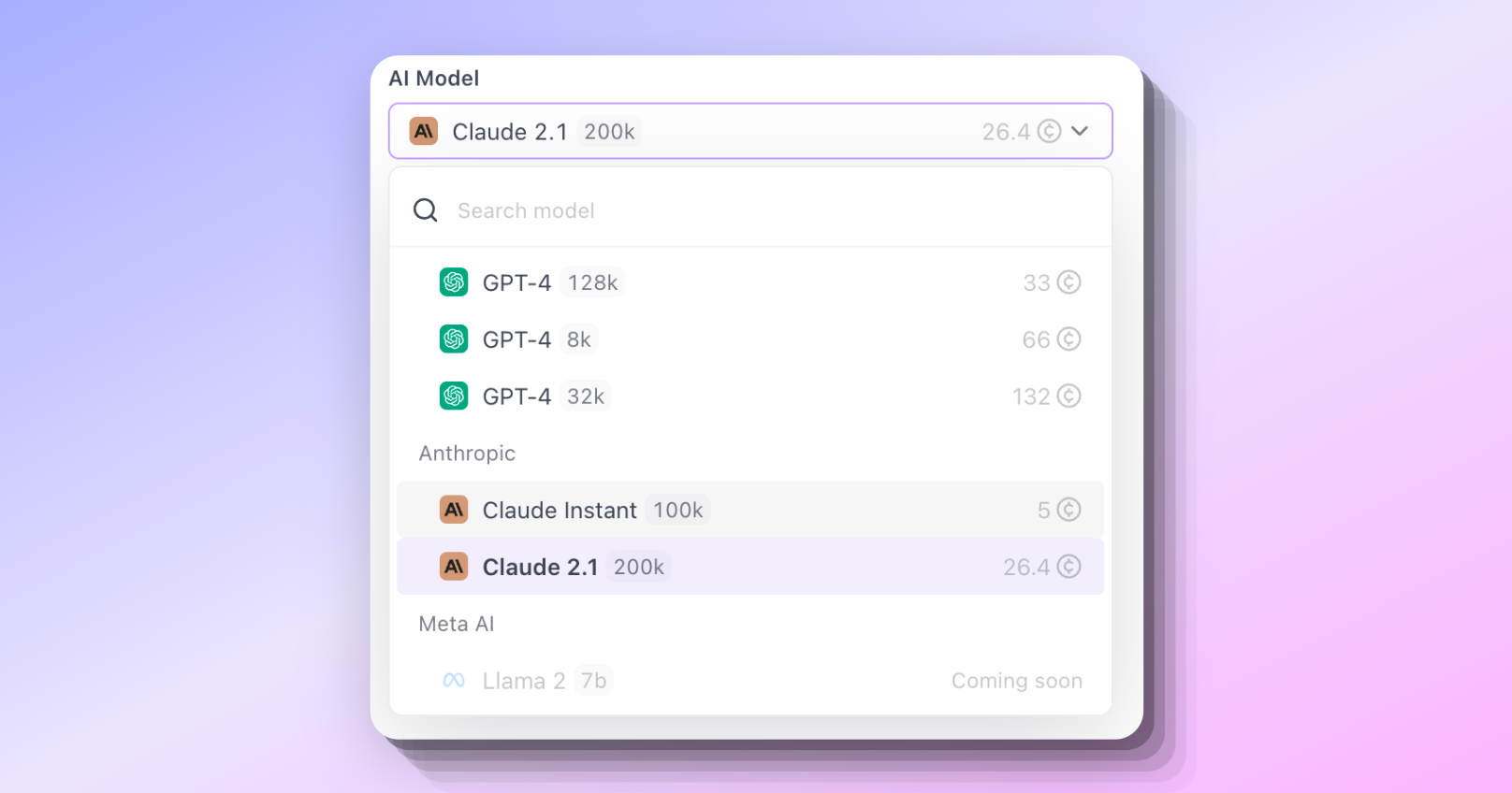

Anakin AI supports all the popular AI models, including the latest ones such as gpt-4-turbo and claude-2.1, which has a 200k-token context window.

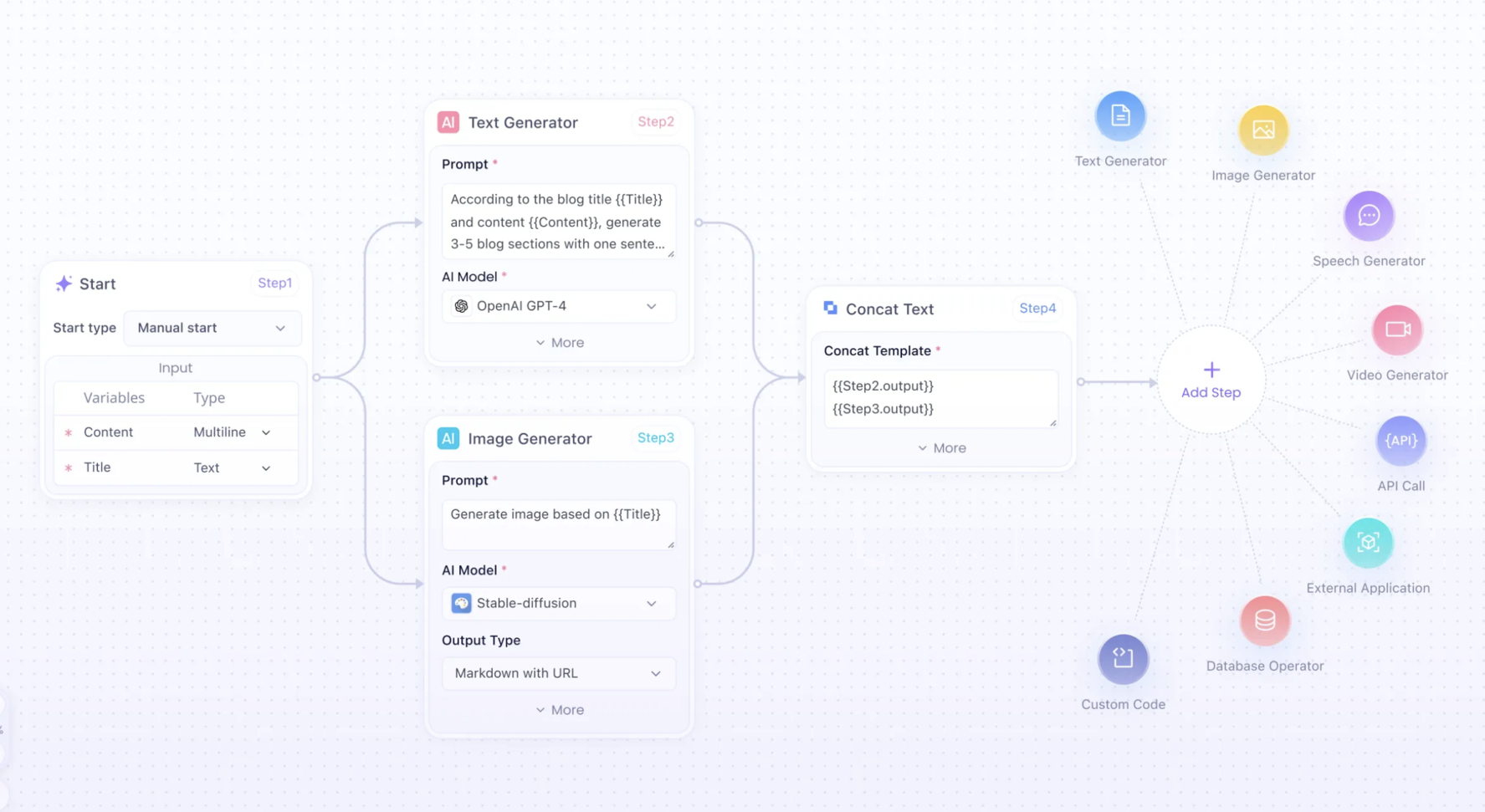

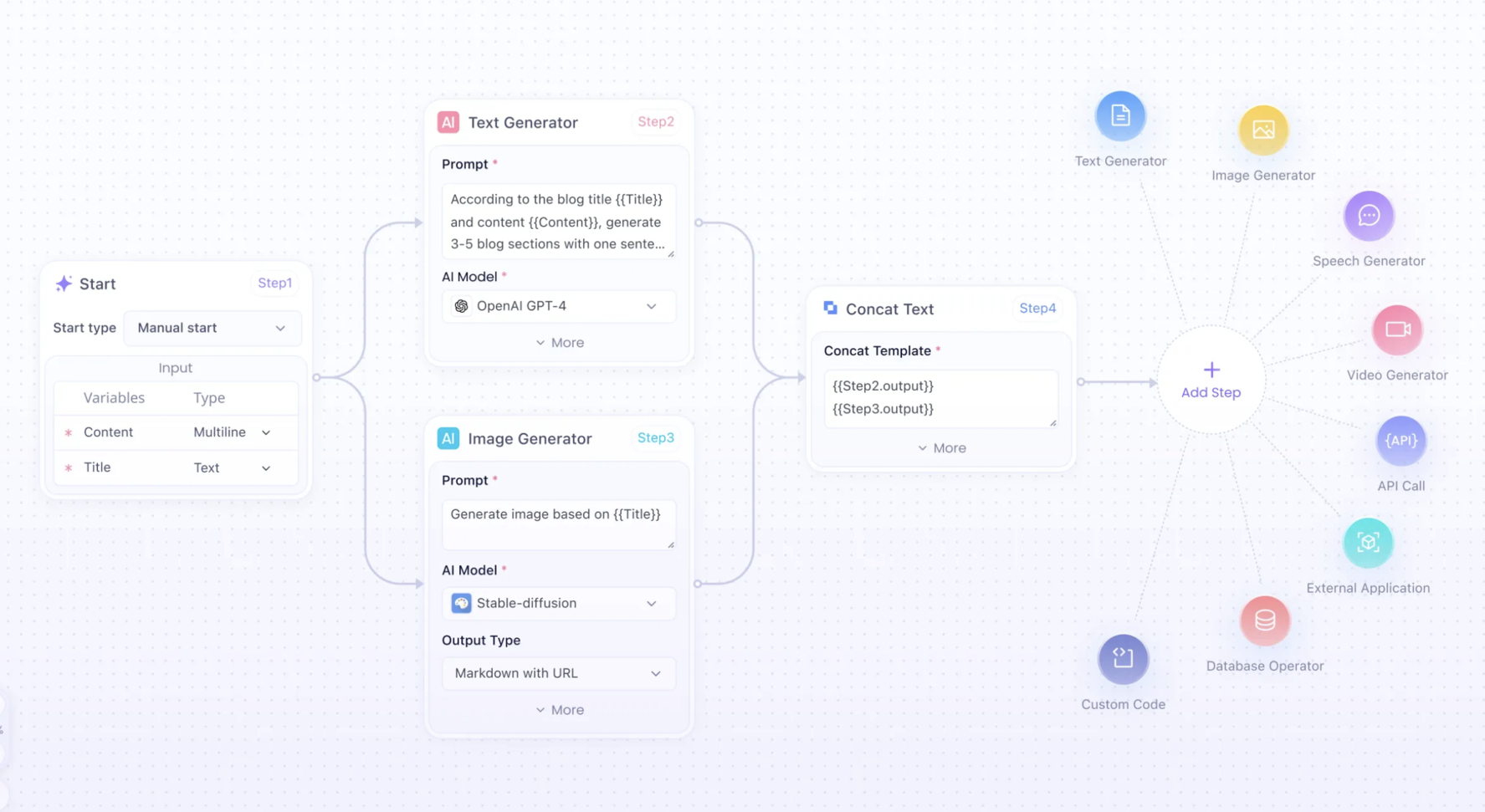

Built on top of these AI models, Anakin AI lets you create any AI app workflow with no code.

Interested? Build your own GPT-4/Claude app with Anakin AI now!👇👇👇

Who Wins: Claude 2, GPT-4, or Bard? Let's Compare!

Claude 2 vs GPT-4 vs Bard – Compare the Accuracy

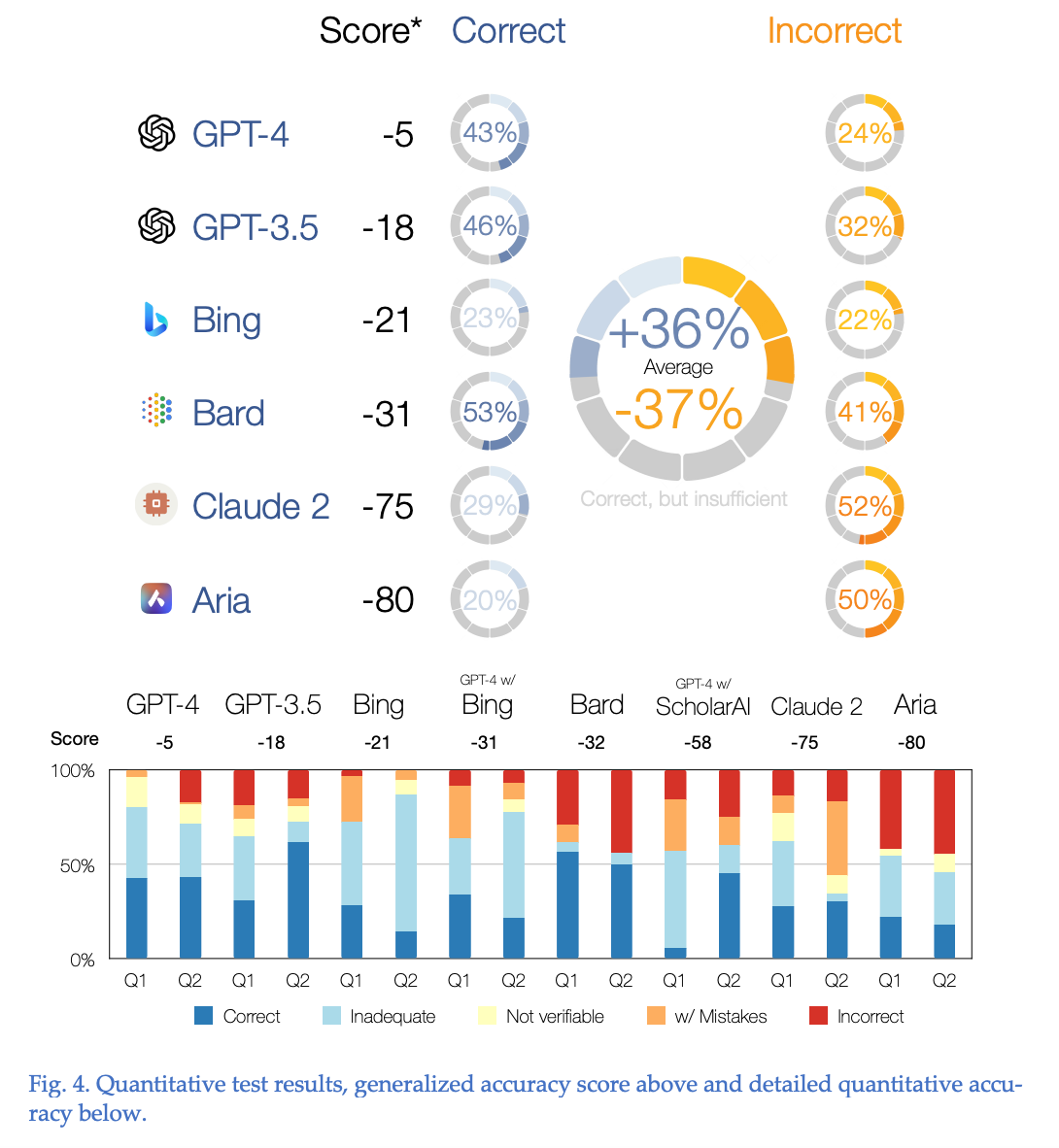

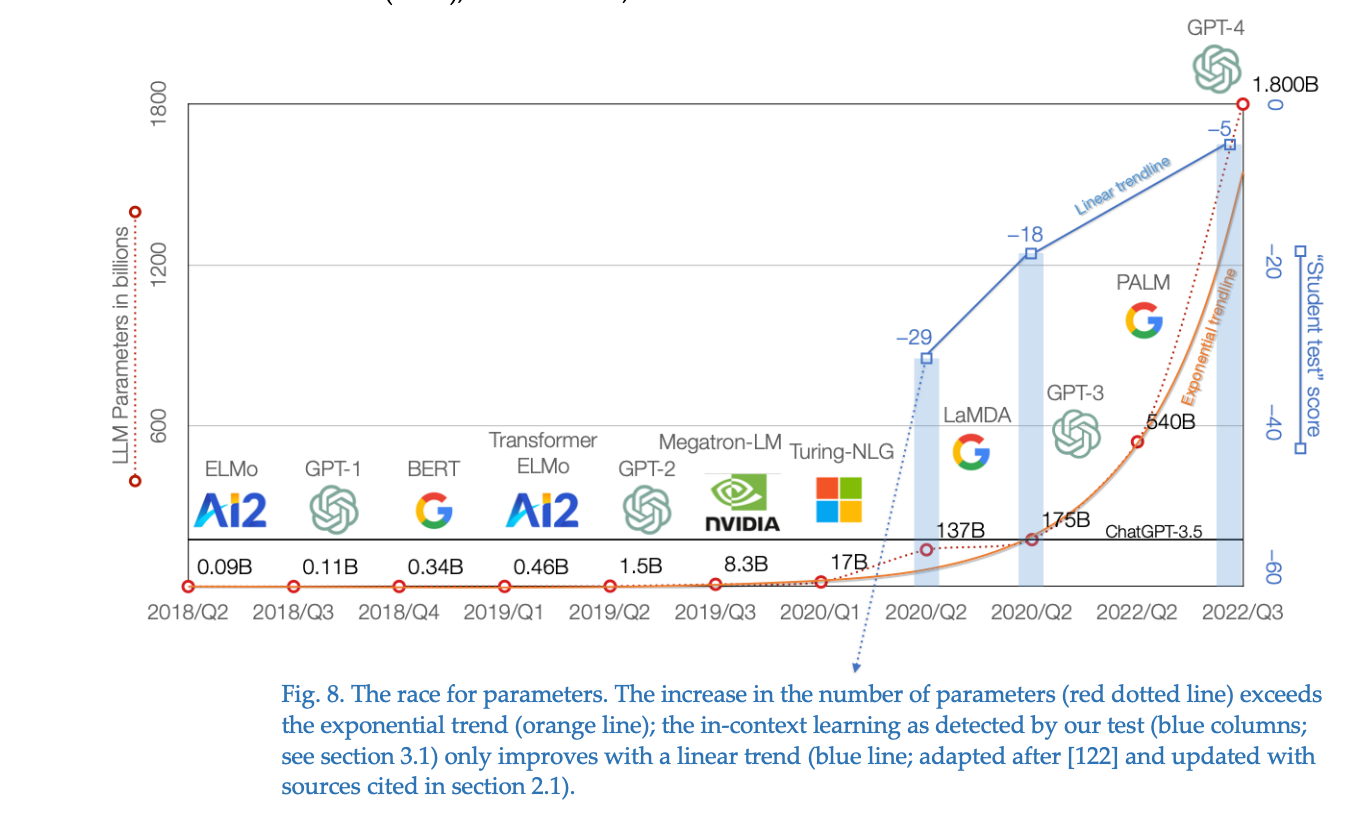

Source of the data: ChatGPT v Bard v Bing v Claude 2 v Aria v human-expert. How good are AI chatbots at scientific writing?

The score in the context of the AI chatbot study is a composite measure that reflects the accuracy and reliability of the chatbots' responses to scientific prompts. Here's a detailed breakdown of what the score represents and how it should be interpreted:

- Score Calculation: The score is the percentage of correct responses given by the AI minus twice the percentage of incorrect responses. As a formula:

  Score = % Correct - (2 x % Incorrect)

- Interpreting the Score:

  - A higher score indicates better performance, with more correct answers and fewer mistakes.

  - A lower or negative score means incorrect answers weigh heavily against correct ones. Because each incorrect answer counts double, the score turns negative as soon as the incorrect rate exceeds half the correct rate.

  - A score of zero or above indicates that correct answers outnumber incorrect ones by at least two to one. This is the benchmark one might expect from a human expert in a similar test setting.

For instance, GPT-4's score of -5 suggests that while it had a substantial number of correct answers (43%), the incorrect ones (24%) were significant enough to lower its overall score but not drastically. This is much closer to what one would expect from a human expert compared to the other AIs.

Now, let's put these scores into perspective with the specifics:

- GPT-4's Performance: With a score of -5, GPT-4 shows a relatively balanced ratio of correct to incorrect responses. This indicates a strong grasp of the subject matter, albeit with some inaccuracies that impact its score. The 43% correctness suggests GPT-4's responses were often on target, supporting its reputation as a highly capable AI chatbot.

- Claude 2's Challenges: Claude 2, with a score of -75, seems to struggle significantly in this test. The 29% correct response rate is overshadowed by a high rate of incorrect answers (52%), which drastically affects its score. This might reflect a need for more nuanced training or improvements in its processing algorithms.

- Bard's Mixed Results: Bard's score of -31, with the highest correct answer rate at 53%, reflects a different challenge. Despite providing a majority of correct answers, its incorrect response rate (41%) is substantial, indicating that while Bard can offer relevant information, it also risks providing a fair amount of misinformation.
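To make the arithmetic concrete, here is a minimal Python sketch that applies the formula above to the percentages quoted in this article. The function name `composite_score` and the `quoted` dictionary are our own illustrative choices, not something taken from the study. Note that the rounded figures quoted here give -29 for Bard rather than the reported -31; we assume the gap comes from rounding in the quoted percentages.

```python
def composite_score(pct_correct, pct_incorrect):
    """Score = % correct - 2 * % incorrect, as described in this article."""
    return pct_correct - 2 * pct_incorrect


# (percent correct, percent incorrect) as quoted in this article.
# These are rounded values, so results may differ slightly from the study.
quoted = {
    "GPT-4": (43, 24),
    "Claude 2": (29, 52),
    "Bard": (53, 41),
}

scores = {name: composite_score(c, i) for name, (c, i) in quoted.items()}

# Print the chatbots from best to worst score.
for name, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {score}")
```

The double penalty is why Bard ranks below GPT-4 despite having the highest share of correct answers: its 41% incorrect rate costs it 82 points.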

In a real-world scenario, these scores would help users determine which AI chatbot is more reliable for tasks requiring scientific accuracy. For example, a researcher or academic might lean towards GPT-4 for its balanced accuracy, while casual users might prefer Bard for its conversational approach, despite the risks of encountering more errors.

What about the originality? Let's compare:

Claude 2 vs GPT-4 vs Bard – Compare the Originality

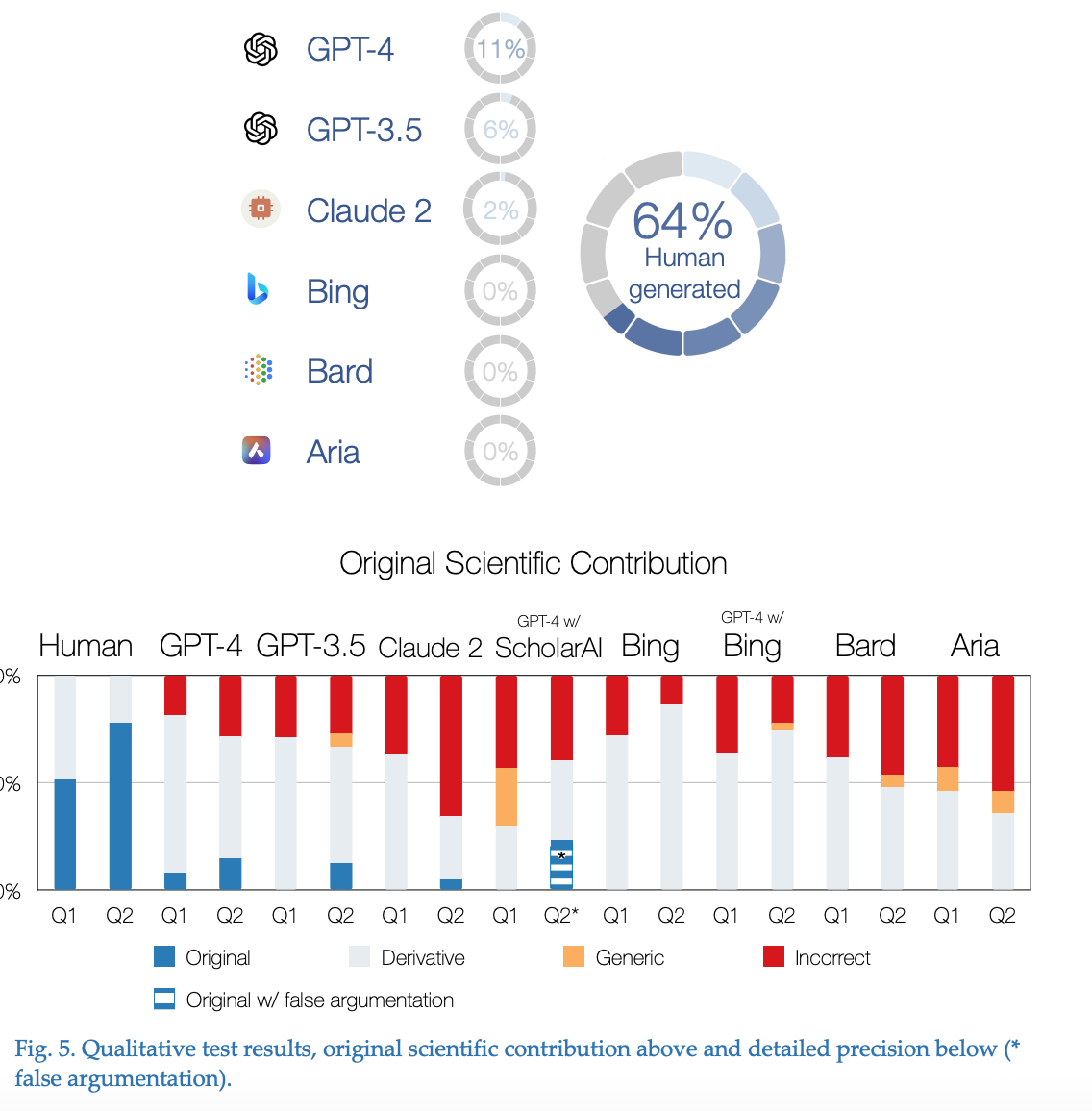

The originality percentage in the context of AI chatbot performance is a measure of how well these systems can generate new, unique, and valuable contributions in response to prompts, especially in scientific writing. This metric is particularly important in academia, where the creation of original content is often a critical part of research and publication.

Let's unpack the originality percentages for each chatbot from the study:

- GPT-4: It stands out with an 11% originality score, which, while not overwhelming, indicates a capacity to provide some level of unique insight or novel content. In the context of AI, this is a significant achievement, suggesting that GPT-4 can not only recite known information but also combine it in new ways to potentially offer fresh perspectives or ideas.

- GPT-3.5: With a 6% originality score, its predecessor GPT-3.5 shows that it too can contribute new ideas, but it's less adept at this than GPT-4. This could be due to a variety of factors, such as the number of parameters or training data differences.

- Claude 2: Claude 2's 2% score is modest, implying that while it can sometimes produce content that goes beyond mere regurgitation of known data, it's mostly limited to reiterating existing information. This suggests there's significant room for improvement in enabling Claude 2 to synthesize and innovate.

- Bard and Aria: Both of these chatbots scored 0% in originality, indicating that in the tests they didn't demonstrate the ability to generate new scientific ideas or contributions. Their responses might be accurate and informative but are derived from existing sources rather than showcasing the creation of new concepts or content.

Understanding the originality percentage is vital:

- For Researchers: This metric can help determine the suitability of a chatbot as a research assistant. A higher originality score could be more useful in brainstorming sessions or when exploring new angles in a research project.

- For Educators and Students: In educational settings, a chatbot with higher originality can aid in learning by providing unique examples or explaining concepts in novel ways.

- For Content Creators: Those in creative industries might prefer chatbots with higher originality scores for generating innovative ideas or fresh content.

So, is Claude better than GPT-4? The data suggests that GPT-4 holds the edge in accuracy and detailed knowledge. However, Claude 2's commitment to ethical AI might appeal to those who prioritize responsible AI use over sheer performance.

And when asking, how does Bard stack up against Claude? It's evident Bard takes the lead in conversational agility, but Claude 2's principled approach presents a compelling choice for those looking at the bigger picture of AI's role in society.

Feature Comparison: Google Bard vs Claude vs GPT-4

When choosing an AI chatbot, it's not just about who wins on paper—it's about which one aligns with your specific needs. Here's a table that breaks down the unique features of Claude 2, Bard, and GPT-4:

| Feature | Claude 2 | Bard | GPT-4 |
|---|---|---|---|
| Ethics | Constitution-inspired responses | Follows Google's AI Principles | N/A |
| Parameters | Not disclosed, but advanced | Optimized version of LaMDA | Not officially disclosed; widely reported (unconfirmed) as about 1.8 trillion |
| Strength | Ethical considerations in responses | Conversational agility | Deep learning and adaptability |
| Input | Text-based interactions | Text-based interactions | Text and image inputs (text only in the study) |
| Output | Text responses with ethical lens | Engaging text dialogue | Text (and potentially image) outputs |
| Learning | Reinforcement learning with feedback | Utilizes Google's vast data sources | Reinforcement learning from feedback |
| Coding | Not its primary focus | Not its primary focus | Highly capable in technical tasks |

Claude 2's standout feature is its ethical framework. It's designed to consider the implications of its responses, making it potentially the most socially responsible choice.

Bard shines with its conversational prowess. Backed by Google's extensive database, it can pull information seamlessly, making for an engaging and informative interaction.

GPT-4 is the intellectual titan. With its multimodal inputs and a vast number of parameters, it's prepared to tackle the most complex queries and even take on coding challenges.

Each chatbot brings something unique to the table:

Claude 2:

- Ethical AI: Aimed at delivering not just accurate but conscientious responses.

- Special Use-Case: Ideal for scenarios where ethical considerations are paramount.

Bard:

- Conversational AI: Perfect for users looking for an AI that can simulate human-like discussions.

- Special Use-Case: Serves well in customer service and casual informational inquiries.

GPT-4:

- Deep Learning Powerhouse: Offers robust analytical capabilities and extensive knowledge.

- Special Use-Case: A strong ally for research, academic pursuits, and coding tasks.

As we delve deeper into the world of AI chatbots, it's clear that the decision is more than just a technical one—it's a choice about values, purpose, and the kind of digital interaction you seek. Whether you prioritize ethical interactions, conversational depth, or raw analytical power, there's a chatbot designed for your needs.

How Many Parameters Do Claude, GPT-4, and Google Bard Have?

The parameter count in AI models is a critical factor that often correlates with the model's complexity and capability. For Claude, GPT-4, and Bard, the number of parameters they possess reflects their potential to process information and learn from interactions.

- GPT-4 is widely reported to have around 1.8 trillion parameters, although OpenAI has not officially confirmed the figure. If accurate, that count would make it one of the largest AI models to date, capable of a vast array of complex tasks.

- Claude's parameters have not been publicly disclosed in precise numbers. However, it's recognized as an advanced model, suggesting a significant but unspecified number of parameters that enable its sophisticated performance.

- Bard, powered by Google's LaMDA architecture, operates with a smaller parameter count than GPT-4. While the exact number isn't specified, it's optimized to efficiently handle dialogue, likely featuring a count substantial enough to support its conversational capabilities.

In essence, GPT-4's reported parameter count suggests a depth and breadth of knowledge that few models can match, while Claude's and Bard's parameter counts, though undisclosed, enable them to perform impressively within their designed applications.

Conclusion

In conclusion, the AI chatbot landscape is characterized by a fascinating competition between Claude 2, GPT-4, and Bard, each with its unique strengths and features.

GPT-4's reported scale positions it as a leader in complex task processing and deep learning capabilities. Claude 2, with its emphasis on ethical AI, offers a unique perspective on AI interactions, while Bard leverages Google's vast information repository for engaging conversational experiences.

In the future, we can expect these chatbots to evolve, offering even more sophisticated capabilities and applications. And don't forget that you can build your own AI app with Anakin AI now using GPT-4 or Claude with no code!

FAQs

Is Claude 2 better than ChatGPT?

Claude 2 offers a unique approach by integrating ethical considerations into its responses, which might be seen as a differentiator from ChatGPT, especially in contexts where ethical dialogue is prioritized.

Is Claude 2 good at coding?

While Claude 2's capabilities are advanced, its proficiency in coding tasks has not been as prominently featured as GPT-4's, which is renowned for its ability to handle complex coding challenges.

Is Claude Instant better than ChatGPT?

The answer depends on the context and the specific use case. Claude Instant is built for fast, lower-cost responses, while ChatGPT's extensive training serves different user needs.

How is Claude different from ChatGPT?

Claude is designed to adhere to a set of ethical guidelines, which may lead its responses to prioritize safety and fairness over other considerations, unlike ChatGPT, which is optimized primarily for information accuracy and coherence.

What is special about Claude 2?

Claude 2's specialty lies in its ethical AI framework, which ensures that its interactions and content generation are aligned with values and principles that reflect global standards of AI ethics.

from Anakin Blog anakin.ai/blog/claude-2-vs-...
